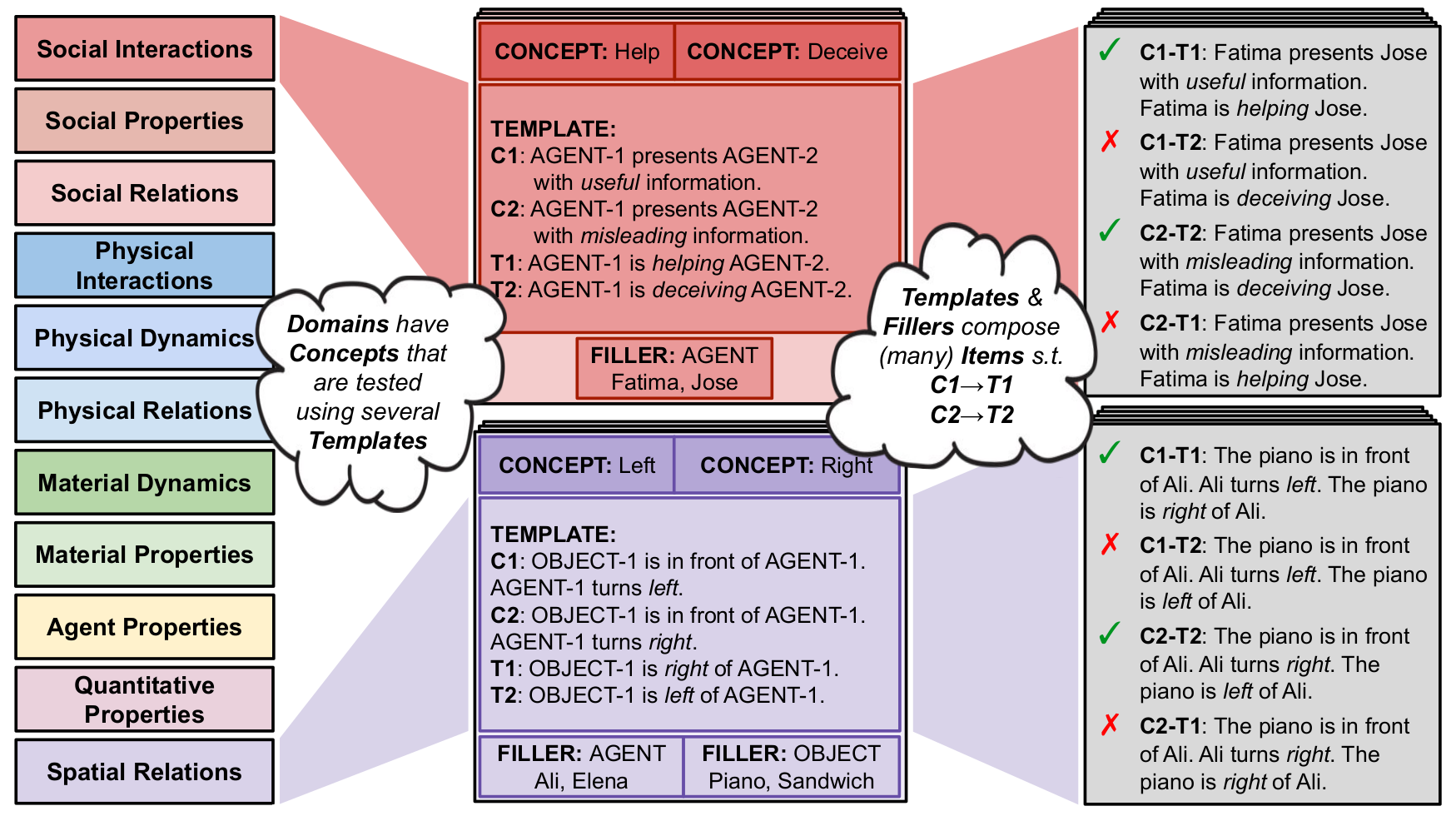

For an intelligent agent to interact with the world, it needs to be able to construct, maintain, and update internal world models (in cognitive science, variants of such world models are also known as mental models or situation models). The extent to which LLMs possess and use internal world models is subject to ongoing investigation. The EWoK framework offers an opportunity to combine individual elements of world knowledge to flexibly construct multi-step scenarios for evaluating world modeling capabilities in LLMs, within and across physical and social knowledge domains. Our framework targets specific cognitive concepts (or concept pairs, such as friend/enemy). Concept knowledge is not limited to definitions, but can be used productively across a wide range of scenarios. Thus concepts leveraged in context are the first-class object of the EWoK framework, as opposed to individual sentences or facts.

EWoK is a flexible framework for evaluating aspects of “world modeling” in language models. Models must use their knowledge of concepts to match a target text with a plausible/implausible context. Each concept is associated with several items that test knowledge of the concept (often by contrasting it with another concept). Items are created in a flexible yet controlled manner using the EWoK dataset generation procedure. At the core of the framework are atomic units and combination rules that, subject to constraints, generate templates, which are then populated with fillers. This two-step procedure yields many more carefully controlled items than a single-step template-filling approach could.
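As a rough illustration of the two-step idea, the sketch below first combines atomic units for a concept pair into a template, then populates the template with fillers under a simple constraint. The sentence wording, the names `ATOMS`, `build_template`, and `fill_template`, and the agent names are all hypothetical and are not taken from the EWoK materials or codebase.

```python
from itertools import permutations

# Step 1: atomic units for one concept pair (illustrative wording, not EWoK's).
ATOMS = {
    "friend": {"context": "{a} is {b}'s friend.", "target": "{a} helps {b}."},
    "enemy": {"context": "{a} is {b}'s enemy.", "target": "{a} hinders {b}."},
}


def build_template(concept1, concept2):
    """Combine two contrasting concepts into a template with two contexts and two targets."""
    return {
        "context1": ATOMS[concept1]["context"],
        "context2": ATOMS[concept2]["context"],
        "target1": ATOMS[concept1]["target"],
        "target2": ATOMS[concept2]["target"],
    }


def fill_template(template, agents):
    """Step 2: populate the slots; permutations() enforces that a and b differ."""
    return [
        {key: text.format(a=a, b=b) for key, text in template.items()}
        for a, b in permutations(agents, 2)
    ]


template = build_template("friend", "enemy")
items = fill_template(template, ["Ali", "Bea", "Chen"])
print(len(items))  # one item per ordered pair of distinct agents
print(items[0])
```

Even in this toy version, one template and three fillers yield six items; adding more atomic units or fillers multiplies the count while the contrast being tested stays fixed.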

Domains are inspired by literature in cognitive science. Many domains are shown by past research in cognitive neuroscience to recruit dedicated machinery in the human brain.

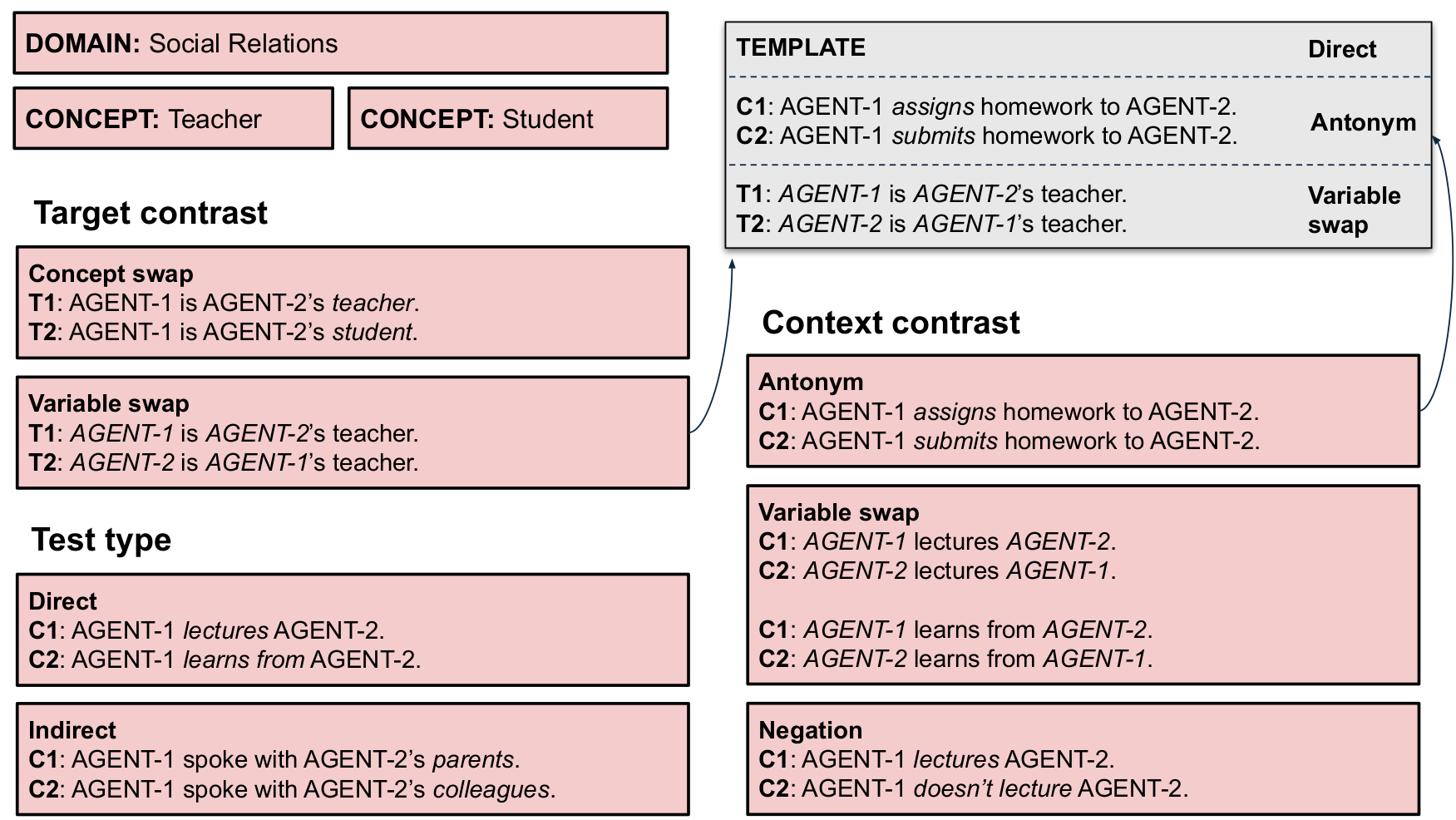

Types of minimal pair contrasts in Context and Target pairs. Examples shown here are for one domain and concept pair. Templates may be direct, testing concepts explicitly, or indirect, testing concepts implicitly using likely scenarios (e.g., a student is more likely to talk to a teacher's colleagues rather than parents). Context and target contrasts reflect how concepts are tested. For instance, antonym contrasts words with opposing meanings, negation leverages "not", and variable swap exploits relative ordering of entities.

EWoK uses a minimal pairs-of-pairs design: each item has two contexts (C1, C2) and two targets (T1, T2), and each context is plausible given exactly one of the two targets. C1 (but not C2) is plausible with T1, and C2 (but not C1) is plausible with T2. With different combinations of concepts, contexts, and fillers, EWoK can generate arbitrarily many controlled datasets, making it widely useful for evaluation!
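One way to turn this design into a score is to check, for each target, whether the model prefers it under the matching context. The sketch below assumes a per-item score of half a point per correct comparison, which gives a 50% chance level; the function name `item_score` and the numbers in `toy_logp` are made up for illustration and do not come from any model or from the EWoK code.

```python
def item_score(logp):
    """Score one item from a mapping (target, context) -> log P(target | context).

    Assumed rule: T1 should be more likely under C1 than under C2,
    and T2 should be more likely under C2 than under C1.
    """
    t1_correct = logp[("T1", "C1")] > logp[("T1", "C2")]
    t2_correct = logp[("T2", "C2")] > logp[("T2", "C1")]
    return (int(t1_correct) + int(t2_correct)) / 2


# Made-up log probabilities for a single item.
toy_logp = {
    ("T1", "C1"): -10.2,
    ("T1", "C2"): -12.5,
    ("T2", "C1"): -9.0,
    ("T2", "C2"): -9.8,
}
print(item_score(toy_logp))
```

In this toy item the model gets the T1 comparison right and the T2 comparison wrong. Because the same target text is compared across two contexts, differences in target length or word frequency cancel out within each comparison.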

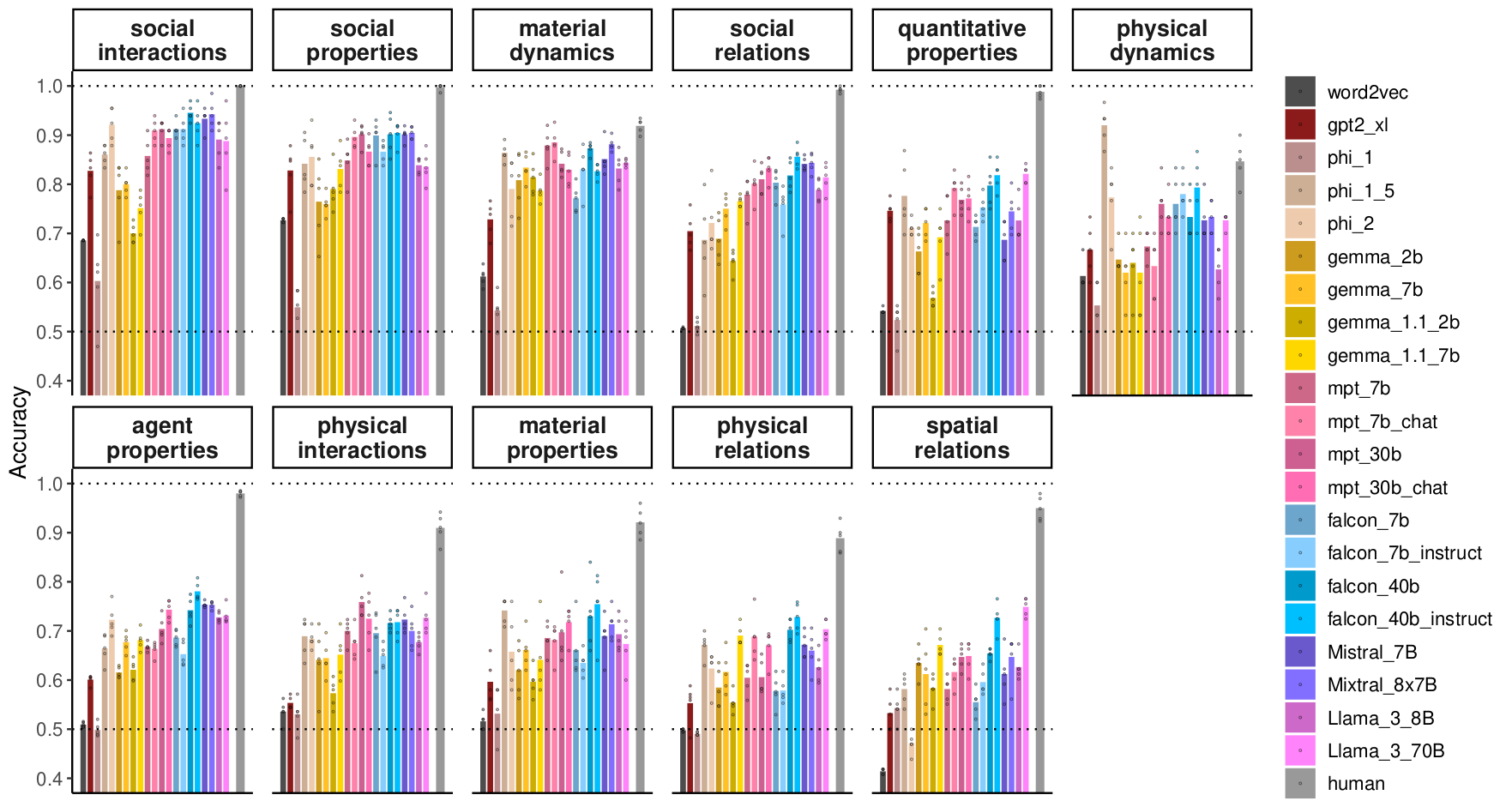

We evaluate 20 open-weights large language models (LLMs) on EWoK-core-1.0, a dataset of 4,374 items covering 11 world knowledge domains. LLM performance varies drastically by domain and is often substantially worse than human performance. Individual LLMs mostly show similar performance patterns across domains, but these patterns are not always consistent with the human pattern. In the figure, the dotted line at 0.5 denotes chance accuracy. The light gray rectangle shows the range of human accuracy across 5 dataset versions, with the light gray line showing the mean. Each dot reflects LLM performance on a single version of EWoK-core-1.0, with the bar reflecting the mean across the 5 versions.

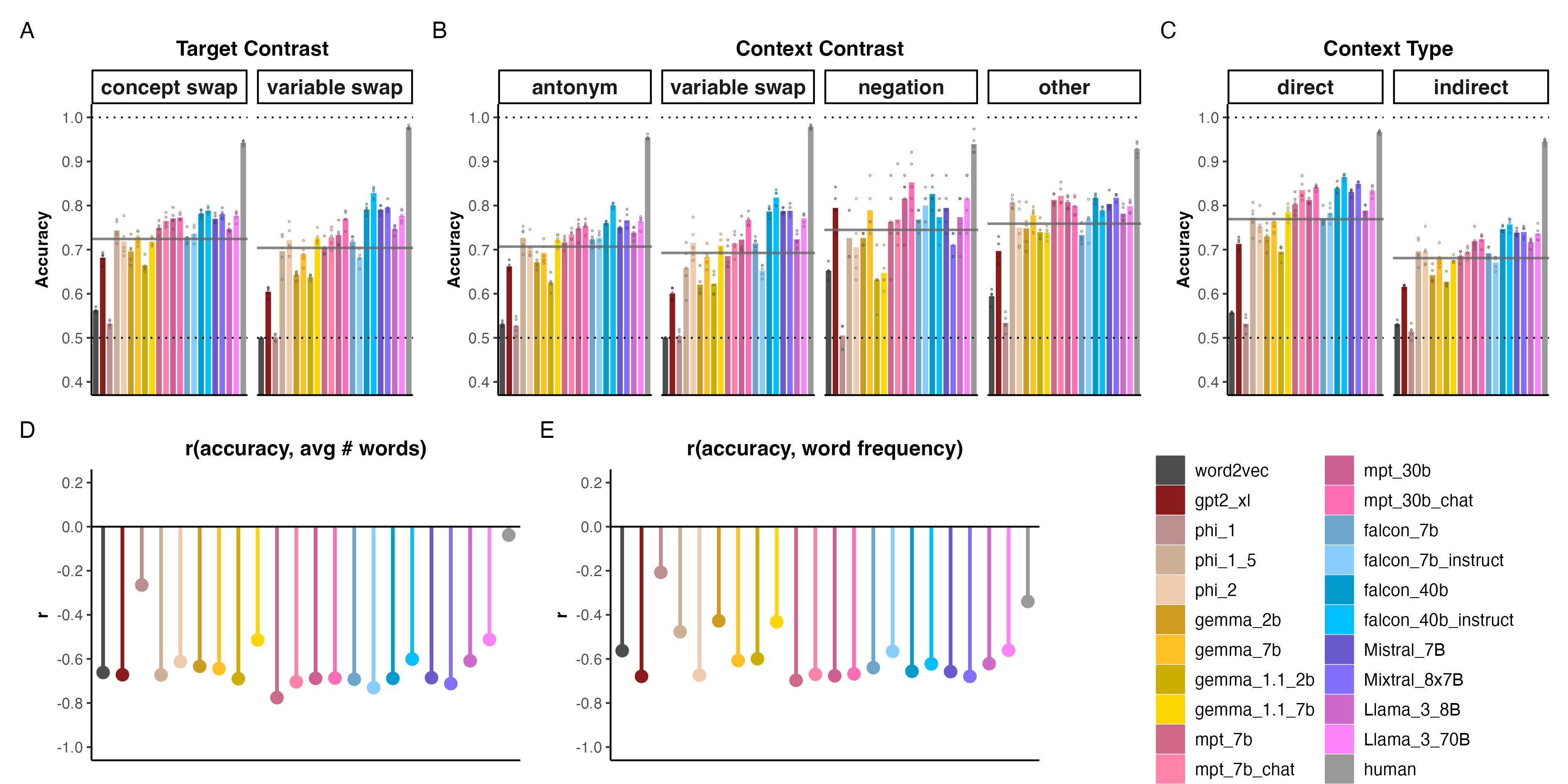

Top: LLM and human performance across target contrast (A), context contrast (B), and context type (C), evaluated with LogProbs. The dark gray line shows average model performance. Bottom: correlation between LLM accuracy and surface-level item features: (D) average item length and (E) average word frequency in the item. Humans are insensitive or only weakly sensitive to these features, whereas model performance strongly correlates with them. The (counterintuitive) negative relationship between accuracy and word frequency arises because the hard domains happen to have high word frequency; it reverses once domain is controlled for.
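The sign reversal described in the caption is an instance of Simpson's paradox, and a toy example makes the mechanism concrete. The numbers below are synthetic and chosen only to reproduce the pattern; they are not EWoK results, and computing the correlation within each domain is just one simple way of controlling for domain, not necessarily the analysis used by the authors.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Synthetic data: (word frequency, accuracy) per item, grouped by domain.
# The hard domain has higher word frequency AND lower accuracy overall.
domains = {
    "easy": {"freq": [1.0, 2.0, 3.0], "acc": [0.80, 0.85, 0.90]},
    "hard": {"freq": [6.0, 7.0, 8.0], "acc": [0.50, 0.55, 0.60]},
}

pooled_freq = [f for d in domains.values() for f in d["freq"]]
pooled_acc = [a for d in domains.values() for a in d["acc"]]

pooled_r = pearson(pooled_freq, pooled_acc)
within_r = {name: pearson(d["freq"], d["acc"]) for name, d in domains.items()}

print("pooled:", round(pooled_r, 2))  # negative across all items
print("within:", {k: round(v, 2) for k, v in within_r.items()})  # positive per domain
```

Pooling the items mixes the between-domain difference into the trend, so the pooled correlation is negative even though accuracy rises with frequency inside each domain.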

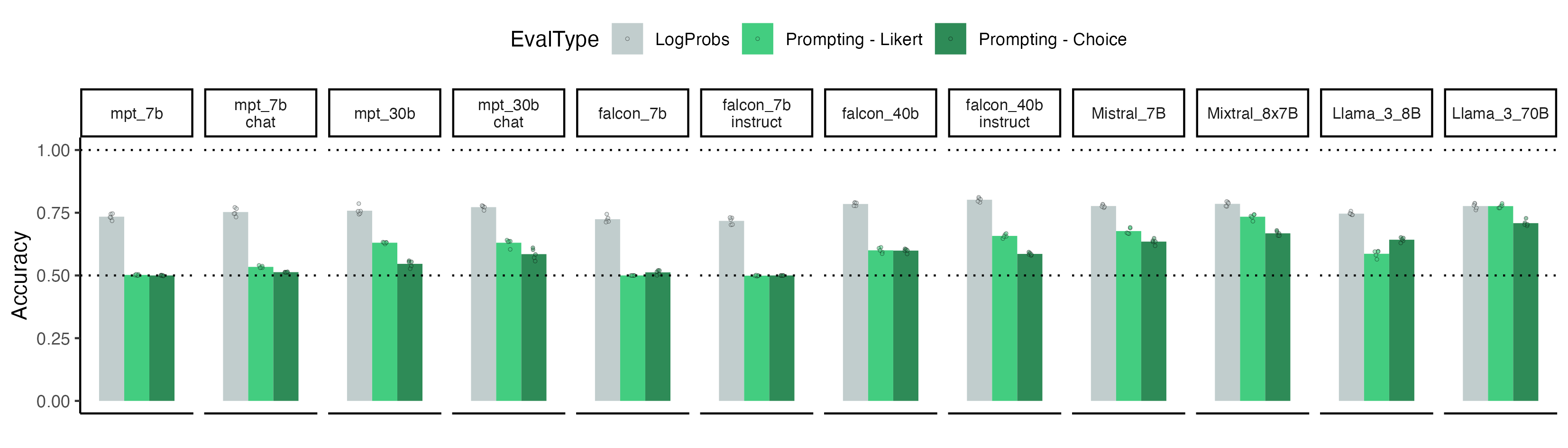

LLM performance assessed with LogProbs vs. two prompt-based tasks, Likert and Choice. The prompting was 2-shot, and the outputs were constrained to allowed values (1-5 for Likert, or {1, 2} for Choice); this setup was chosen to maximize model performance. LogProbs is a better strategy in nearly all cases. Within the two prompting tasks, models commonly generated the same value, e.g., “1”, for every item. Our metric was designed such that even in this scenario, there would be a 50% baseline. For Likert, without this safeguard (i.e., when requiring strict inequality), the top-performing Meta-Llama-3-70B model drops to 59%. MPT-7B, which performs comparably on LogProbs and Choice, drops to 2%. Thus, these data are far from “solved” with prompting in the general case.
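To see why tie handling matters so much here, consider a model that always answers “1”. The sketch below assumes one possible safeguard, awarding half credit when the two ratings in a comparison are tied, and contrasts it with strict inequality. The half-credit rule, the function names, and the rating dictionaries are assumptions made for illustration; the exact metric used in the paper may differ.

```python
def comparison_credit(matching, mismatching, tie_credit):
    """Credit for one comparison of Likert ratings for the same target."""
    if matching > mismatching:
        return 1.0
    if matching == mismatching:
        return tie_credit
    return 0.0


def likert_accuracy(ratings, tie_credit):
    """Mean credit over items; each item contributes two comparisons."""
    total = 0.0
    for r in ratings:
        total += comparison_credit(r["T1|C1"], r["T1|C2"], tie_credit)
        total += comparison_credit(r["T2|C2"], r["T2|C1"], tie_credit)
    return total / (2 * len(ratings))


# A degenerate model that outputs "1" for every context-target pair.
constant_model = [{"T1|C1": 1, "T1|C2": 1, "T2|C2": 1, "T2|C1": 1}] * 10

with_safeguard = likert_accuracy(constant_model, tie_credit=0.5)
strict = likert_accuracy(constant_model, tie_credit=0.0)
print(with_safeguard, strict)
```

Under the assumed half-credit rule the constant model sits exactly at the 50% baseline, while strict inequality sends it to zero, which matches the direction of the drops reported above for models that rarely vary their output.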

EWoK is useful not only for evaluation but also for future targeted research on conceptual understanding in LLMs. Our controlled items allow for causally manipulating the context shown to models for the same target text, changing internal representations in a way that might lead to an opposite judgment on the same text.

The current iteration of our dataset is in English; LLM performance is likely to be lower in other languages, especially under-resourced languages. Adapting the EWoK framework to other languages will be important work and may require redesigning the set of concepts and materials we use.

In addition to developing a novel evaluation framework and a challenging dataset to test world knowledge, we lay out best practices for evaluation data use: our framework and dataset are licensed CC-BY 4.0 with Terms of Use (1) requiring attribution if used to train models, and (2) prohibiting plain-text release of EWoK-generated data on the internet to prevent accidental inclusion in large training corpora. We would like to encourage a community standard of clearly reporting what models are trained on and appropriately providing attribution where it is due, both for careful science and out of respect for the creative work of others. You may notice we refrain from evaluating any closed API-only models for this reason.

@article{ivanova2025elements,
  title={Elements of World Knowledge (EWoK): A cognition-inspired framework for evaluating basic world knowledge in language models},
  author={Ivanova, Anna A and Sathe, Aalok and Lipkin, Benjamin and Kumar, Unnathi U and Radkani, Setayesh and Clark, Thomas H and Kauf, Carina and Hu, Jennifer and Pramod, RT and Grand, Gabriel and others},
  journal={Transactions of the Association for Computational Linguistics},
  volume={13},
  pages={1245--1270},
  year={2025},
  publisher={MIT Press 255 Main Street, 9th Floor, Cambridge, Massachusetts 02142, USA}
}